The private cloud failures of the last decade weren't technical problems—they were mindset problems.

3:47 AM, Sunday

Your phone buzzes on the nightstand. A PagerDuty alert:

CRITICAL: api-prod-03 not responding

You drag yourself out of bed, open your laptop, and try to SSH into the server. It's unresponsive. A reboot doesn't help. The disk is throwing I/O errors.

This server—you called it "snorlax" because you named all your servers after Pokémon—has the only copy of a critical configuration script. You don't have backups. The documentation is in a Confluence page that hasn't been updated in 18 months.

Your team spends the next 6 hours recovering the service. You swear you'll "fix this properly" once things are stable.

You never do.

This is the "snowflake" problem. And it's holding your infrastructure back.

The Pet Pathology

Traditional infrastructure suffers from what we call the "Special Snowflake" problem:

| Symptom | Root Cause | Consequence |
|---|---|---|
| Server requires manual care | Unique configuration, built by hand | Fear of reboot, no disaster recovery |
| "Works on my machine" | Configuration drift between environments | Failed deployments, production bugs |
| Undocumented fixes | Tribal knowledge, no audit trail | Knowledge loss when staff leave |
| Scaling takes weeks | Manual provisioning processes | Lost business agility |

The private cloud failures of 2015-2020 weren't caused by bad technology. They were caused by treating infrastructure as pets—what we in the industry call "snowflakes." These are unique, hand-crafted systems that require constant manual attention and care.

In 2026, this approach is no longer acceptable.

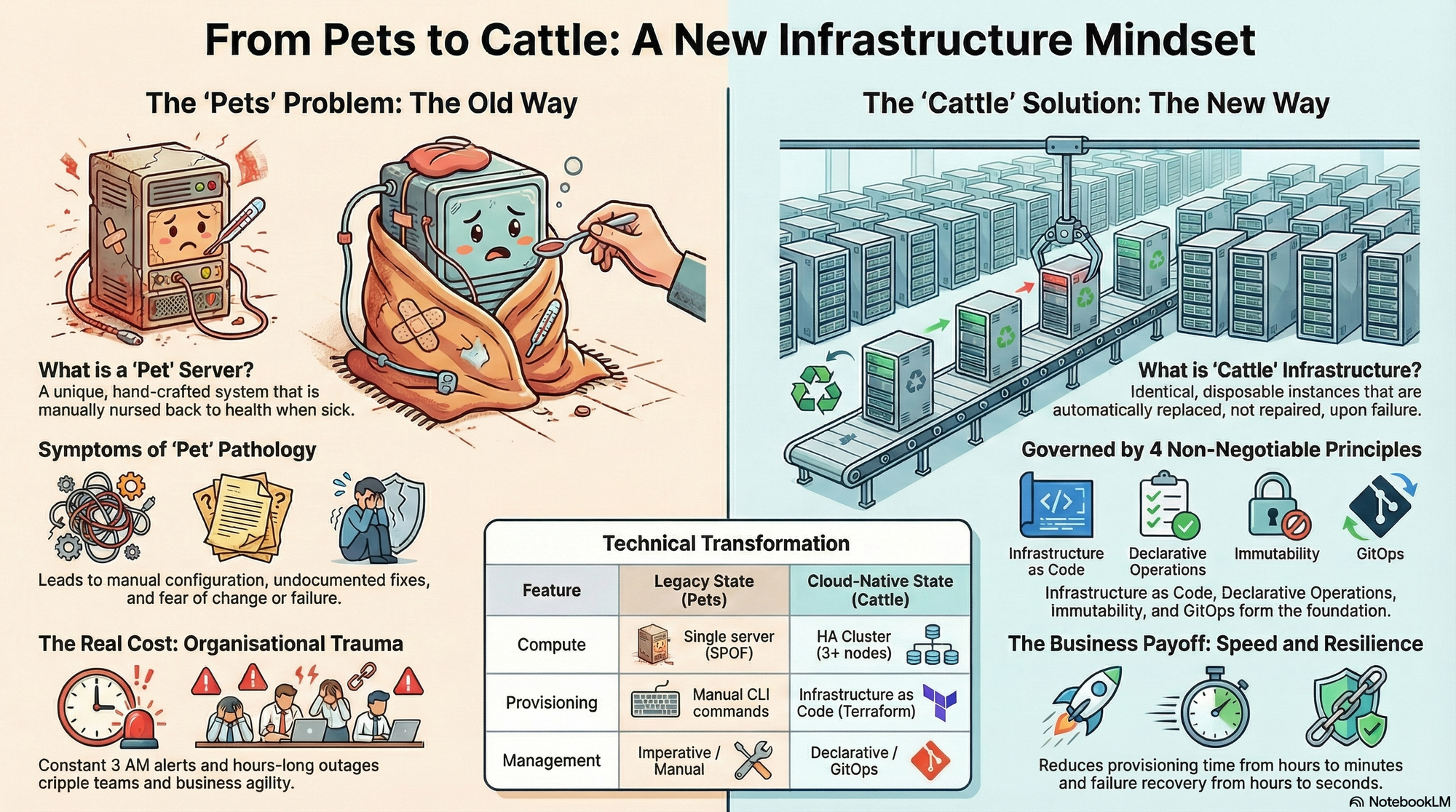

Pets vs Cattle: A Mindset Shift

The solution is the "Cattle" philosophy—a metaphor that's become standard in our industry:

Pets: Unique names, carefully nurtured, individually cared for.

Cattle: Identical instances, replaced on failure, no individual value.

Note: We're not literally talking about animals here. This is industry shorthand for two very different approaches to infrastructure.

This isn't just a cute analogy—it's a fundamental rethinking of how we relate to infrastructure.

What Cattle Infrastructure Looks Like

In a cattle-based infrastructure:

- Servers are disposable: When one fails, you replace it. You don't repair it.

- Configuration is code: Every component is defined in version-controlled files.

- Operations are declarative: You describe the desired state, not the steps to get there.

- Changes are auditable: Git is the single source of truth.

The contrast isn't subtle. Here's the transformation:

| Feature | Legacy State (As-Is) | Cloud-Native State (To-Be) |
|---|---|---|
| Compute | Single server (SPOF) | HA Cluster (3+ nodes) |
| Provisioning | Manual CLI commands | Infrastructure as Code (Terraform/Pulumi) |
| Networking | Static host configs | Dynamic load balancing with VIP |
| Storage | Local ephemeral disk | Distributed replicated block |
| Management | Imperative / Manual | Declarative / GitOps |

The Economic Case

Let's talk about money, because that's what usually drives change.

A manual server provisioning process might take 2-3 hours. Multiply that by every server you provision, every time you need to scale, every disaster recovery scenario you fumble through.

Now consider:

- Automation reduces provisioning time from hours to minutes

- Declarative operations eliminate configuration drift (no more "works on my machine")

- Git-based workflows provide full audit trails (compliance becomes easier, not harder)

- Immutable infrastructure reduces failure recovery from hours to minutes (replace, don't repair)
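To put rough numbers on the provisioning claim above, here is a back-of-the-envelope sketch. Only the 2-3 hour manual figure comes from this article. The fleet size (40 servers a year) and the 10-minute automated run are assumptions chosen for illustration, so substitute your own.

```python
def provisioning_hours(servers: int, hours_per_server: float) -> float:
    """Total engineer-hours spent provisioning `servers` machines."""
    return servers * hours_per_server


# Assumed inputs, for illustration only.
SERVERS_PER_YEAR = 40        # hypothetical: servers provisioned per year
MANUAL_HOURS = 2.5           # midpoint of the 2-3 hour manual estimate
AUTOMATED_HOURS = 10 / 60    # hypothetical: a 10-minute automated run

manual_total = provisioning_hours(SERVERS_PER_YEAR, MANUAL_HOURS)
automated_total = provisioning_hours(SERVERS_PER_YEAR, AUTOMATED_HOURS)
saved = manual_total - automated_total

print(f"manual: {manual_total:.1f} h")
print(f"automated: {automated_total:.1f} h")
print(f"saved: {saved:.1f} h")
```

Under these assumptions the manual process costs about 100 engineer-hours a year against fewer than 7 for the automated one, and that is before counting a single disaster recovery.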

The real cost isn't in the tooling—it's in the organizational trauma of pets. Every 3AM page, every undocumented fix, every "we'll document it later" promise—that's debt you're paying.

The Non-Negotiable Principles

If you want to build private cloud infrastructure that actually scales, these principles are non-negotiable:

1. Infrastructure as Code

Every component—servers, networks, storage, applications—must be defined in version-controlled files. No hand-crafted configurations. No "I'll just SSH in and fix this."

If it's not in Git, it doesn't exist.
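One way to hold yourself to that rule is to compare what is declared in the repository with what is actually running. The sketch below assumes you can already produce both lists (for example, from your IaC state and your inventory). The function names and hostnames are hypothetical.

```python
from __future__ import annotations


def find_unmanaged(declared: set[str], running: set[str]) -> set[str]:
    """Resources that are running but not declared in Git (snowflakes)."""
    return running - declared


def find_missing(declared: set[str], running: set[str]) -> set[str]:
    """Resources declared in Git that are not actually running."""
    return declared - running


# Hypothetical inventory: "snorlax" was built by hand and never declared.
declared_in_git = {"api-prod-01", "api-prod-02"}
running_now = {"api-prod-01", "snorlax"}

print("unmanaged:", sorted(find_unmanaged(declared_in_git, running_now)))
print("missing:", sorted(find_missing(declared_in_git, running_now)))
```

Anything in the first set is a pet waiting to page you. Anything in the second is a promise the repository makes that the infrastructure is not keeping.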

2. Declarative Operations

Describe what you want, not how to get there.

Imperative (bad):

    # Don't do this: one-off commands leave no record of intent
    kubectl create deployment nginx --image=nginx
    kubectl expose deployment nginx --port=80
    kubectl apply -f some-random-manifest.yaml

Declarative (good):

    # Do this
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
    spec:
      replicas: 3
      # ... desired state defined here

The declarative approach makes self-healing possible. A controller continuously compares the actual state with the desired state, and if something drifts, it corrects the difference automatically.
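The mechanics behind that are easier to see in a toy model. The following is a minimal sketch of a reconciliation step, assuming state is just a mapping from workload name to replica count. It is not how Kubernetes implements its controllers, and `plan` and `reconcile` are hypothetical names.

```python
from __future__ import annotations


def plan(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """List the actions needed to move `actual` replica counts to `desired`."""
    actions = []
    for name, want in sorted(desired.items()):
        have = actual.get(name, 0)
        if have < want:
            actions.append(f"scale-up {name} {have}->{want}")
        elif have > want:
            actions.append(f"scale-down {name} {have}->{want}")
    # Anything running that nobody declared gets removed.
    for name in sorted(actual.keys() - desired.keys()):
        actions.append(f"delete {name}")
    return actions


def reconcile(desired: dict[str, int], actual: dict[str, int]) -> tuple[dict[str, int], list[str]]:
    """One pass of the loop: compute the plan, then adopt the desired state.

    In this toy model, "applying" the plan simply means the new actual
    state equals the desired state.
    """
    actions = plan(desired, actual)
    return dict(desired), actions


desired = {"nginx": 3}
drifted = {"nginx": 1, "debug-pod": 1}   # someone poked at production

new_state, actions = reconcile(desired, drifted)
print(actions)
print(plan(desired, new_state))          # empty: nothing left to fix
```

The point of the design is that nobody has to remember what was changed by hand. The loop only needs the desired state and the current state, and running it again after convergence does nothing.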

3. Immutable Infrastructure

Replace, don't repair.

When a server fails:

- Old way: SSH in, diagnose, patch, reboot, pray

- New way: Terminate, provision new instance from known-good image

This eliminates configuration drift and reduces recovery time from hours to minutes.
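As a rough sketch of "replace, don't repair", the snippet below swaps every unhealthy instance for a fresh one built from a known-good image. `Instance`, `provision`, `replace_unhealthy`, and the image names are all hypothetical stand-ins for whatever your provisioning tooling exposes.

```python
from __future__ import annotations

from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class Instance:
    instance_id: str
    image: str
    healthy: bool = True


_ids = count(1)  # simple ID generator for the sketch


def provision(image: str) -> Instance:
    """Create a brand-new instance from the given image."""
    return Instance(instance_id=f"node-{next(_ids)}", image=image)


def replace_unhealthy(fleet: list[Instance], golden_image: str) -> list[Instance]:
    """Keep healthy instances; replace unhealthy ones instead of repairing them."""
    return [inst if inst.healthy else provision(golden_image) for inst in fleet]


fleet = [
    Instance("a", "img-v1"),
    Instance("b", "img-v1-hand-patched", healthy=False),  # the pet
]
fleet = replace_unhealthy(fleet, golden_image="img-v1")
print(fleet)
```

Note that the hand-patched image disappears along with the failed instance. Drift cannot accumulate on a machine that is never modified after it boots.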

4. GitOps

Git is the single source of truth. All changes happen through pull requests. The cluster state is automatically synchronized with Git.

This means:

- Full audit trail: Every change is tied to a commit and a PR

- Rollback is trivial: Revert the commit, the cluster reverts

- No direct CLI access in production: All changes go through Git
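To see why rollback and the audit trail come for free, consider a small in-memory model. It assumes the desired state is a plain dictionary and that a sync agent simply adopts whatever the latest commit declares. `Repo` and `sync` are hypothetical and stand in for a real Git repository and a real GitOps agent.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Repo:
    # Each commit is (message, desired state at that commit).
    commits: list[tuple[str, dict]] = field(default_factory=list)

    def commit(self, message: str, state: dict) -> None:
        self.commits.append((message, dict(state)))

    def head(self) -> dict:
        return dict(self.commits[-1][1])

    def revert_head(self) -> None:
        """Add a new commit restoring the state before HEAD; history is kept."""
        if len(self.commits) < 2:
            raise ValueError("nothing to revert to")
        message, _ = self.commits[-1]
        _, previous = self.commits[-2]
        self.commit(f'Revert "{message}"', previous)


def sync(repo: Repo) -> dict:
    """Stand-in for the sync agent: the cluster adopts what HEAD declares."""
    return repo.head()


repo = Repo()
repo.commit("nginx at 3 replicas", {"nginx": 3})
repo.commit("scale nginx to 10", {"nginx": 10})
cluster = sync(repo)

repo.revert_head()           # the rollback is itself a commit
cluster = sync(repo)
print(cluster)
print([message for message, _ in repo.commits])
```

The revert does not erase anything. The bad change and its rollback both stay in the history, which is exactly what an auditor wants to see.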

Why Bare Metal Matters

Here’s a controversial position: We’re going all‑in on bare‑metal Kubernetes.

To be fair, the raw performance gap is small. In 2025, mature Type-1 hypervisors deliver near-native performance for most production workloads: on properly configured systems, CPU overhead is typically in the low single digits (around 1-5%) and memory overhead is usually no more than about 5-8% compared to bare metal.

Even so, for stable, high-utilization private clouds with predictable workloads, bare metal can reduce total cost of ownership by roughly 20-30% and deliver noticeably better throughput and latency, especially for CPU- and I/O-intensive applications. Exact gains depend heavily on the workload and the provider.

Yes, this means you need to handle OS updates and hardware provisioning. But that’s not a bug—it’s a feature. You control your security posture from the metal up, avoid the noisy‑neighbor effect common in multi‑tenant virtualized environments, and reduce exposure to hypervisor‑level vulnerabilities and vendor lock‑in.

When VMs Still Make Sense

VMs are still the right choice for multi-tenant scenarios where you need strong isolation between teams. If you're building an internal platform that runs untrusted workloads, KVM/QEMU provides the isolation you need. But for a dedicated private cloud running your own workloads, bare metal wins.

The Resource Reality Check

Let's talk about what you actually need to build this. Here's our resource scaling matrix:

| Scale | CPU | RAM | Storage | Network | Use Case |
|---|---|---|---|---|---|
| Lab | 8-16 cores | 32-64 GB | 500 GB - 1 TB | 1 Gbps | Learning, testing |
| Team | 32-64 cores | 128-256 GB | 2-5 TB | 10 Gbps | Small team apps, CI/CD |
| Department | 64-128 cores | 256-512 GB | 10-20 TB | 10-25 Gbps | Internal tools, services |
| Enterprise | 256+ cores | 1 TB+ | 50 TB+ | 25+ Gbps | Production workloads |

Notice what's missing? There's no "single server" tier. That's because single-server infrastructure is not infrastructure—it's a hobby project.